Overview of Data Types

Since its inception, the StudyForrest dataset has grown appreciably through both internal and external contributions. Made available are raw data, preprocessed data, and an extensive collection of annotations of the movie. Often, multiple data types are available for the same set of participants — for example, multiple fMRI scans of up to 10 hours total per participant.

Below is an overview of all major components of the StudyForrest dataset. This includes data relating to brain structure, brain function, and movie stimulus properties. Further details for each data type can be found in the linked publications.

Behavior and Brain Function

A diverse set of stimulation paradigms and data acquisition setups was used to characterize participants' brain function along a variety of dimensions.

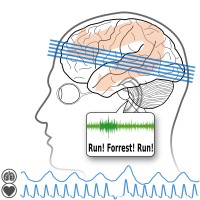

High-res 7T fMRI on 2h Audio Movie (+Cardiac/Respiration)

Publication Explore 7T, audio, cardiac, respiration

Two principal data modalities were acquired: blood oxygenation level dependent (BOLD) fMRI scans, continuously capturing brain activity at a spatial resolution of 1.4 mm, and physiological recordings of heart beat and breathing. For technical validation, all measurements are also available for a full-length gel phantom scan.

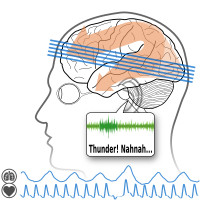

High-res 7T fMRI Listening to Music (+Cardiac/Respiration)

Publication Explore 7T, music, cardiac, respiration

High-resolution, ultra high-field (7 Tesla) functional magnetic resonance imaging (fMRI) data from 20 participants who were repeatedly stimulated with a total of 25 music clips, with and without speech content, from five different genres using a slow event-related paradigm. Heart beat and breathing were recorded simultaneously.

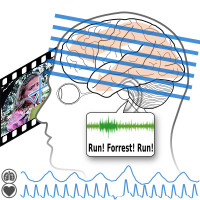

3T fMRI on 2h Movie, Eyegaze (+Cardiac/Respiration)

Publication Explore 3T, audio, eyegaze, cardiac, respiration

Two-hour 3 Tesla fMRI acquisition while 15 participants were shown an audio-visual version of the stimulus motion picture, simultaneously recording eye gaze location, heart beat, and breathing.

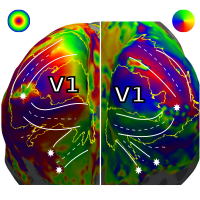

Retinotopic Mapping

Publication 3T, retinotopic mapping, visual cortex, eccentricity, polar angle

Standard 3 mm fMRI recording of a retinotopic mapping procedure with expanding and contracting ring and rotating wedge stimuli. Resulting eccentricity and polar angle maps of the visual cortex of 15 participants are available.

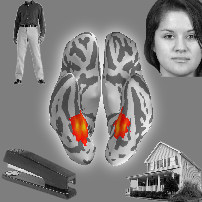

Higher Visual Area Localizer

Publication Explore 3T, visual area localizer, block-design, one-back task

3 mm fMRI data from a standard block-design visual area localizer using greyscale images for the stimulus categories human faces, human bodies without heads, small objects, houses, outdoor scenes comprising nature and street scenes, and phase-scrambled images.

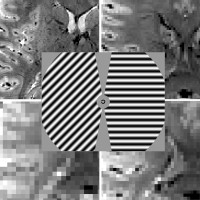

Multi-res 3T/7T fMRI (0.8-3mm) on Visual Orientation

Publication 7T, 3T, visual, oriented gratings, decoding

Ultra high-field 7T and 3T fMRI data recorded at 0.8 (7T-only), 1.4, 2, and 3 mm isotropic voxel size under stimulation with flickering, oriented grating stimuli. Grating orientation in the left and right visual field varied independently to enable decoding analyses.
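To give a rough sense of what the listed resolutions imply, the following sketch computes the volume of an isotropic voxel for each edge length and expresses it relative to a 3 mm voxel. The function names are hypothetical and chosen for illustration only; the voxel sizes are the ones listed above.

```python
# Isotropic voxel edge lengths (mm) listed for the visual orientation dataset.
VOXEL_SIZES_MM = [0.8, 1.4, 2.0, 3.0]


def voxel_volume_mm3(edge_mm: float) -> float:
    """Volume of an isotropic voxel with the given edge length."""
    return edge_mm ** 3


def relative_volume(edge_mm: float, reference_mm: float = 3.0) -> float:
    """Voxel volume as a fraction of the reference voxel's volume."""
    return voxel_volume_mm3(edge_mm) / voxel_volume_mm3(reference_mm)


for edge in VOXEL_SIZES_MM:
    print(f"{edge} mm: {voxel_volume_mm3(edge):.3f} mm^3, "
          f"{relative_volume(edge):.3f} of a 3 mm voxel")
```

Because volume grows with the cube of the edge length, a 0.8 mm voxel covers only about 1.9% of the volume of a 3 mm voxel.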

Brain Structure and Connectivity

A versatile set of structural brain images is available to provide a comprehensive in-vivo assessment of all participants' brain hardware.

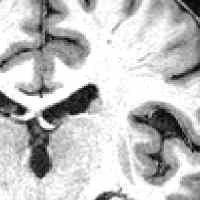

T1-weighted MRI

Publication 3T, T1

An image with 274 sagittal slices (FoV 191.8×256×256 mm) and an acquisition voxel size of 0.7 mm with a 384×384 in-plane reconstruction matrix (0.67 mm isotropic resolution) was recorded using a 3D turbo field echo (TFE) sequence (TR 2500 ms, inversion time (TI) 900 ms, flip angle 8 degrees, echo time (TE) 5.7 ms, bandwidth 144.4 Hz/px, Sense reduction AP 1.2, RL 2.0, scan duration 12:49 min).
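The geometric figures quoted above can be cross-checked with simple arithmetic. The sketch below assumes that the 256 mm in-plane field of view is divided evenly across the 384-point reconstruction matrix and that the slices are contiguous; the helper names are hypothetical.

```python
def reconstructed_voxel_mm(fov_mm: float, matrix: int) -> float:
    """In-plane voxel edge after reconstruction: field of view / matrix size."""
    return fov_mm / matrix


def slab_extent_mm(n_slices: int, thickness_mm: float) -> float:
    """Extent covered by contiguous slices of equal thickness."""
    return n_slices * thickness_mm


# Values taken from the T1-weighted acquisition description.
in_plane_mm = reconstructed_voxel_mm(256.0, 384)
sagittal_extent_mm = slab_extent_mm(274, 0.7)

print(f"reconstructed in-plane voxel: {in_plane_mm:.2f} mm")
print(f"sagittal extent: {sagittal_extent_mm:.1f} mm")
```

Under these assumptions the results agree with the stated 0.67 mm reconstructed resolution and the 191.8 mm field of view along the slice direction.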

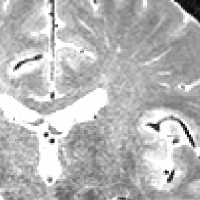

T2-weighted MRI

Publication 3T, T2

A 3D turbo spin-echo (TSE) sequence (TR 2500 ms, TEeff 230 ms, strong SPIR fat suppression, TSE factor 105, bandwidth 744.8 Hz/px, Sense reduction AP 2.0, RL 2.0, scan duration 7:40 min) was used to acquire an image whose geometric properties otherwise match the T1-weighted image.

Susceptibility-weighted MRI

Publication 3T, SWI

An image with 500 axial slices (thickness 0.35 mm, FoV 181×202×175 mm) and an in-plane acquisition voxel size of 0.7 mm reconstructed at 0.43 mm (512×512 matrix) was recorded using a 3D Presto fast field echo (FFE) sequence (TR 19 ms, TE shifted 26 ms, flip angle 10 degrees, bandwidth 217.2 Hz/px, NSA 2, Sense reduction AP 2.5, FH 2.0, scan duration 7:13 min).

Diffusion-weighted MRI

Publication Explore 3T, DTI

Diffusion data were recorded with a diffusion-weighted single-shot spin-echo EPI sequence (TR 9545 ms, TE 80 ms, strong SPIR fat suppression, bandwidth 2058.4 Hz/px, Sense reduction AP 2.0) using 32 diffusion-sensitizing gradient directions with b = 800 s/mm² (two samples for each direction), 70 slices (thickness of 2 mm and an in-plane acquisition voxel size of 2×2 mm, reconstructed at 1.7×1.7 mm, 144×144 in-plane matrix, FoV 224×248×140 mm). Acquisition duration was 12:38 min.

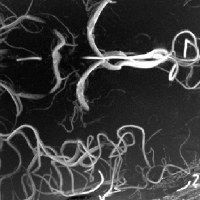

Angiography

Publication 7T, angiography

A 3D multi-slab time-of-flight angiography was recorded at 7 Tesla for the FoV of the fMRI recording. Four slabs with 52 slices (thickness 0.3 mm) each were recorded (192×144 mm FoV, in-plane resolution 0.3×0.3 mm, GRAPPA acceleration factor 2, phase encoding direction right-to-left, 15.4% slice oversampling, 24 ms TR, 3.84 ms TE).

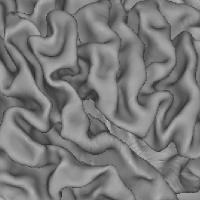

Cortical Surface Reconstruction

Publication Explore derivative

Reconstructed cortical surface meshes and various associated estimates were generated from the T1- and T2-weighted images of each participant using the FreeSurfer 5.3 image segmentation and reconstruction pipeline.

Movie Stimulus Annotations

Annotating the content of the Forrest Gump movie, the key stimulus used in the project, is an open-ended endeavour. The movie's naturalistic content is rich in diverse visual and auditory features as well as facets of social communication, which both enables and requires a versatile description.

Cuts, Depicted Locations, and Temporal Progression

Publication movie, annotation, cut, scenes, time progression, location

The exact timing of each of the 870 shots, and the depicted location after every cut at a high, medium, and low level of abstraction. Additionally, four classes distinguish how the depicted time differs between shots. Each shot is also annotated regarding the type of location (interior/exterior) and time of day. Cuts were initially detected using an automated procedure and then curated by hand.

Speech

Publication movie, soundtrack, annotation, speech, grammar, word, dialog

The exact timing of each of the more than 2,500 spoken sentences, 16,000 words (including 202 non-speech vocalizations), 66,000 phonemes, and their corresponding speaker. Additionally, every word is associated with a grammatical category, and its syntactic dependencies are defined.

Portrayed Emotions

Publication movie, annotation, emotion, arousal, valence, cue

Description of portrayed emotions in the movie and the audio description stimulus. The nature of an emotion is characterized with basic attributes such as onset, duration, arousal, and valence — as well as explicit emotion category labels and a record of the perceptual evidence for the presence of an emotion.

Semantic Conflict

Publication movie, annotation, lies, irony

Identification of episodes with portrayal of lies, irony, or sarcasm by three independent observers.

Body Contact

Description movie, annotation, body parts, touch, body language

A detailed description of all body contact events in the movie, including timing, actor and recipient, body parts involved, intensity and valence of the touch, and any potential audio cues.

Eye Movement Labels

Publication movie, annotation, eye gaze, saccades, fixations, smooth pursuit

Classification of eye movements for two groups of 15 participants watching the movie: one group inside an MRI scanner and another group in a laboratory setting. Saccades, post-saccadic oscillations, fixations, and smooth pursuit events are distinguished.

Music

GitHub repository music, annotations, soundtrack

Timing, artist, song title, and release year of every song in the movie's soundtrack.

Low-Level Perceptual Confounds

GitHub repository movie, soundtrack, annotation

Frame-wise (40 milliseconds) annotations of auditory and visual low-level confounds in the audio-description and audio-visual movie: e.g. root-mean-square power (a.k.a. volume), left-right volume difference, brightness of each movie frame, and perceptual difference of each movie frame with respect to its previous frame.
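As an illustration of what such frame-wise measures look like, here is a minimal sketch in plain Python. It is not the procedure used to produce the published annotations, whose exact definitions are in the linked repository. The function names are hypothetical, the mono mix is assumed to be the mean of both channels, and the mean absolute pixel change is only a crude stand-in for a perceptual difference measure. The input data are synthetic.

```python
import math
from typing import List, Sequence, Tuple

FRAME_SECONDS = 0.040  # frame duration stated in the text (25 frames per second)


def frame_bounds(n_samples: int, sample_rate: int) -> List[Tuple[int, int]]:
    """Start/stop sample indices of consecutive, complete 40 ms frames."""
    step = round(sample_rate * FRAME_SECONDS)
    return [(start, start + step) for start in range(0, n_samples - step + 1, step)]


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square amplitude of a block of audio samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def audio_confounds(left, right, sample_rate) -> List[Tuple[float, float]]:
    """Per-frame (RMS of the mono mix, left-minus-right RMS difference)."""
    rows = []
    for start, stop in frame_bounds(min(len(left), len(right)), sample_rate):
        l_block, r_block = left[start:stop], right[start:stop]
        mono = [(a + b) / 2 for a, b in zip(l_block, r_block)]  # assumed mono mix
        rows.append((rms(mono), rms(l_block) - rms(r_block)))
    return rows


def brightness(frame) -> float:
    """Mean grey value of one frame given as rows of pixel intensities."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)


def frame_difference(frame, previous) -> float:
    """Mean absolute pixel change between a frame and its predecessor."""
    pairs = [(p, q) for row, prev_row in zip(frame, previous)
             for p, q in zip(row, prev_row)]
    return sum(abs(p - q) for p, q in pairs) / len(pairs)


# Synthetic stand-in data: 80 ms of constant stereo audio at 1 kHz, two 2x2 frames.
left, right = [0.5] * 80, [0.25] * 80
audio_rows = audio_confounds(left, right, sample_rate=1000)
dark, light = [[0.0, 0.0], [0.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]]
print(audio_rows)
print(brightness(light), frame_difference(light, dark))
```

Each tuple in `audio_rows` corresponds to one 40 ms frame, so the rows line up one-to-one with movie frames at 25 frames per second.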

Participant/Acquisition Summary

The following table shows what data are available for each participant. Participant IDs are consistent across all acquisitions.

Raw and preprocessed data were released over time as several datasets, and are typically available from multiple locations. If you cannot locate a dataset component you are interested in, please get in touch. Likewise, if you want to re-share data that was preprocessed in a particular way, please contact info@studyforrest.org.

| Participant ID | 1-3 | 4 | 5 | 6 | 7-8 | 9-10 | 11-13 | 14-15 | 16-17 | 18 | 19 | 20 | 21 | 22-36 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Structural MRI | ||||||||||||||
| T1, T2, DWI, SWI, Angiography | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Natural Stimulation | ||||||||||||||
| 2h audio movie (7T, +cardiac/resp) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||
| 2h audio-visual movie (3T, eyegaze, +cardiac/resp) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||
| 2h audio-visual movie (in-lab eyetracking) | ✓ | |||||||||||||
| Task fMRI | ||||||||||||||
| Listening to music (7T, +cardiac/resp) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||
| Retinotopic mapping (3T) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||
| Visual area localizer (3T) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||
| Flickering oriented gratings (7T @ 0.8, 1.4, 2, and 3mm) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||||
| Flickering oriented gratings (3T @ 1.4, 2, and 3mm) | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||
| Preprocessed Data | ||||||||||||||
| FreeSurfer cortical surface reconstruction | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||
| Participant and scan-specific template MRI images (for alignment, masking, structural properties) & (non-)linear transformations between image spaces | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||
| Per-participant aligned fMRI data for within-subject analysis | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||
| Audio-visual movie fMRI aggregate ROI timeseries for Shen et al. (2013) cortex parcellation | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||
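The column headers of the table group participants into ID ranges such as "7-8" or "22-36". When working with the table programmatically, these labels need to be expanded into individual participant IDs. The following sketch shows one way to do that; the function names are hypothetical, and the labels are copied from the table header.

```python
from typing import Iterable, List

# Column labels copied from the participant/acquisition table header.
HEADER_LABELS = ["1-3", "4", "5", "6", "7-8", "9-10", "11-13",
                 "14-15", "16-17", "18", "19", "20", "21", "22-36"]


def expand_label(label: str) -> List[int]:
    """Turn a label like '7-8' or '21' into the list of IDs it covers."""
    first, _, last = label.partition("-")
    return list(range(int(first), int(last or first) + 1))


def expand_all(labels: Iterable[str]) -> List[int]:
    """Expand every column label and concatenate the resulting IDs in order."""
    ids: List[int] = []
    for label in labels:
        ids.extend(expand_label(label))
    return ids


participant_ids = expand_all(HEADER_LABELS)
print(len(participant_ids), participant_ids[0], participant_ids[-1])
```

Expanding all labels yields the contiguous IDs 1 through 36, which confirms that the column groups cover every participant exactly once.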